How to build reliable AI workflows

Think of them as industrial assembly lines, not autonomous digital workers

Building an AI agent can feel like magic.

When you see an LLM execute a complex, multi-step task autonomously and produce a great result, you’re on top of the world.

But then you notice something troubling: the results can vary widely across runs—even for the same inputs. You tweak the prompt, add a few more instructions, write “IMPORTANT!” before critical points.

But no matter how clear your prompt, every execution is like watching a juggler ride a unicycle across a tightrope: you hold your breath wondering if they’ll make it or crash.

Quietly, you admit to yourself that there’s no way you can deploy this to production.

Welcome to the existential despair of trying to make AI blobs perform like reliable systems.

The issue isn’t the models. It’s about who owns the control flow. And that requires rethinking how we architect AI systems.

The need for AI task decomposition

At some point, every serious builder learns the same painful lesson: you can’t prompt your way to reliability.

Even the most disciplined prompt engineering hits a reliability ceiling when the model is managing its own reasoning and sequencing probabilistically. No amount of step-by-step clarity can compensate for lack of architectural control.

The solution is to draw on architectural patterns that systems pros have used for years. We need to think in terms of workflows and cognitive blocks—not blobs.

When I say “blob,” I don’t just mean a messy prompt. I mean any architecture where the LLM manages its own multi-step reasoning inside one call.

By contrast, a “block” is one bounded cognitive act—a single LLM call whose output we can verify before moving on.

The difference isn’t size, it’s control: in a blob, the model owns the flow; in a block system, you do.

That’s where AI task decomposition becomes critical. It’s the simple, old-fashioned idea of breaking a complex job into small, verifiable units, applied to LLM tasks.

The fallacy of autonomous “digital employees”

All the fancy “agent org charts” on social media have seeded a seductive idea: that LLMs are ready to act as full-on autonomous digital employees.

We’re told that we can give an LLM a role—researcher, analyst, writer—and then just let it figure out how to best achieve an objective.

It’s an appealing idea, but from what I’ve seen, it’s rarely the easiest path to reliability in real-world applications.

LLMs are most reliable on narrowly scoped, well-defined tasks, not at maintaining multiple concurrent objectives or reasoning paths over long contexts.

So when we ask a model to research a company, identify insights, and write an email in a single, massively-detailed blob of a prompt, we’re really asking it to juggle a dozen mental contexts at once.1

Just like a human performs better when given a clearly defined task that fits our cognitive limits around memory and reasoning, so does an LLM.

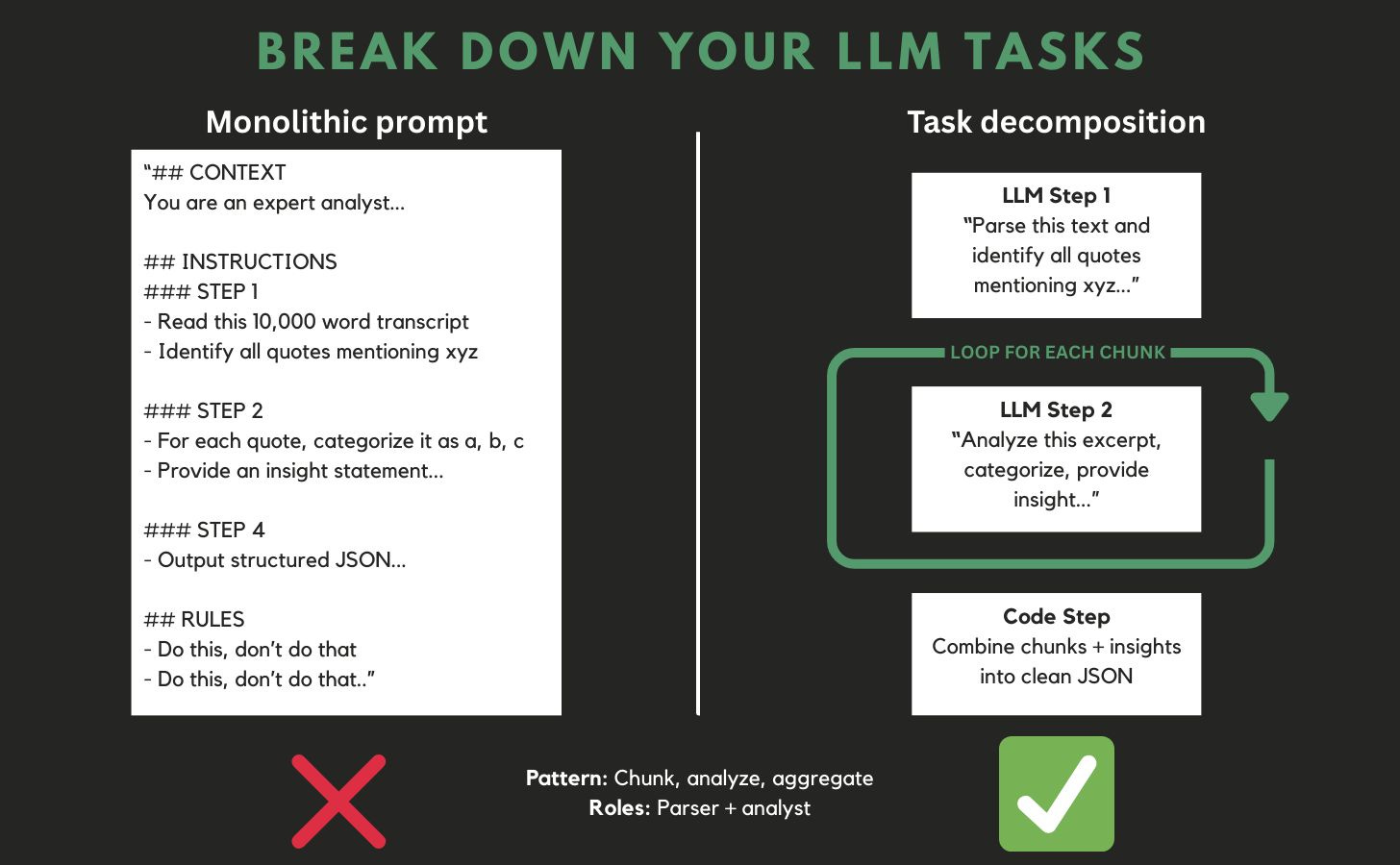

From blobs to blocks

Decomposition means breaking the blob into a pipeline of smaller cognitive acts, each with a clean contract.

BEFORE

One giant prompt:

“Read this transcript, identify all quotes mentioning X, categorize them, analyze them, and provide a summary."

AFTER

Separate LLM calls:

1. Break transcript into chunks.

2. Identify all relevant quotes.

3. For each one, categorize.

4. For each one, provide insights.

5. Synthesize into a meta-summary. Each step in the workflow is an LLM block:

bounded, deterministic, re-runnable

has a defined input and output

next step doesn’t have to guess what the last one meant—it just reads the output

We could think of this like a microservices architecture applied to AI: small, single-purpose modules with strict contracts and idempotent behavior. Each block does one thing and does it well.

Things that must be are determined by the control flow, not left to agent discretion.

The power comes from the choreography of reliable parts.

Another useful analogy is to think of AI systems as virtual industrial automation assembly lines rather than autonomous digital workers.

Even when the robot worker performs very sophisticated actions within a step, individual stations on an assembly line are highly repetitive and narrowly scoped.

Breaking it down in practice

The best way to understand decomposition is to see it at work.

Here are some examples from recent projects I’ve been tackling.

a) Call analysis

BEFORE

A detailed prompt:

“Analyze this transcript, extract the key themes and quotes, and summarize the buyer’s sentiment.”Even though this task already has a relatively narrow scope, it’s still performing multiple cognitive acts in the same task.

Sometimes it found the right quotes, sometimes it missed some, sometimes hallucinated new ones.

The insights were not acceptably consistent between runs.

AFTER

Separate LLM calls:

1. Identify every quote containing the target concept.

2. For each quote, analyze sentiment and context (loop).

3. Aggregate results and summarize themes.Now each LLM step handles one cognitive act: search, analyze, summarize.

Reliability skyrockets because the task is narrow and the LLM can focus its full context on doing it well.

b) Opportunity data analysis

BEFORE

A detailed prompt:

“Analyze all opportunities and identify the main reasons for wins and losses.”Output was again inconsistent and prone to hallucination.

AFTER

Separate LLM calls:

1. For each opportunity, classify as win/loss.

2. Analyze notes to extract key factors influencing the outcome.

3. Summarize core insights at the opportunity level.

4. Analyze pre-processed summaries to synthesize insights at the macro level.You’ll notice here how we build up to the same meta-analysis, but we do it in stages, maximizing accuracy at the atomic level and then feeding those pre-processed insights into the next LLM call to synthesize the macro view.

Because the meta-analysis step is already working with refined material (rather than reviewing raw CRM data), it can produce a much more insightful analysis.

The results of doing this have been impressive. Essentially we’ve been able to recreate many of the intuitions of someone selling the product for 5+ years, in a way that we can scale across the team.

c) Company research

BEFORE

A detailed prompt,

“Research the company by searching for recent news, hiring surges, earnings calls (etc.), identify what matters, and write an outbound email.”This agent sometimes worked spectacularly but in production only succeeded about 35% of the time.

The failures were due to hallucinations, exceeding context limits, missing important signals, or including irrelevant ones.

AFTER

News search pipeline:

1-a. For each keyword, search for articles.

1-b. For each article, run accept/reject.

1-c. For each accepted article, summarize.

1-d. For each accepted article, synthesize “why it matters.”

Financial results pipeline:

2-a. For each company, find latest earnings statement call transcript.

2-b. Find the corresponding company press release.

2-c. Break the text into meaningful chunks for analysis.

2-d. For each chunk, extract relevant quotes.

2-e. For each quote, analyze and provide relevant insights.

2-f. Synthesize all insights and quotes into a meta-analysis of those financial results.

(Repeat similarly for each relevant information source)As you can see, the research portion alone is now broken up across multiple pipelines, each with multiple discrete steps.

Summary of principles

Each of these examples follows the same rhythm: isolate specific cognitive acts, build a contract, and use a deterministic pipeline (Retool, Zapier, n8n, etc.) to route data between those blocks.

Here’s a summary of principles you can apply when breaking down any task or requirement.

Single cognitive act per step. Each unit should require one kind of reasoning—summarizing, selecting, classifying, comparing—not multiple.

Deterministic input/output. The step should have a clearly defined contract: what it takes in, what it returns.

Re-run safe. You can rerun just that step without redoing the whole workflow.

Composable. The output of one step can cleanly feed the next with no ambiguity.

Traditional microservice architecture gives us language for this. Each step has a contract, an input, an output, and can be retried without side effects. AI systems need the same discipline.

The difference is only that with an LLM our “services” are thoughts—discrete cognitive acts, each designed for one thing only.

Field evidence: reliability scales with structure

For the past several months, I’ve been applying these principles to a GTM intelligence system that collects and analyzes thousands of signals—news, social posts, call transcripts, opportunities, and more. The results have been very promising:

~99%+ execution reliability2

96% of analyses were rated meaningful and accurate by human evaluators

83% were rated comprehensive, capturing all critical information

Average insightfulness vs. human analyst score: 4.3 / 5

This platform replaced a bought system, saving us $30K in annual spend. Even more exciting than cost savings is the ability to tailor the output to our needs and produce superior results.

It’s by no means perfect, but it has me optimistic about what’s possible.

Evals and safety checks

You don’t need heavyweight evaluation at every step, but I have found it useful to incorporate a few simple checks:

Schema enforcement: use code to sanitize LLM outputs, coerce to JSON, validate keys/types, strip markdown, etc.

Content floor: require minimal payload per field (e.g., one bullet, 100+ tokens).

(Optional) LLM evaluation: for critical steps, use a quick LLM gate to evaluate previous outputs. This could also be a more robust QA agent to perform a holistic assessment.

So far this has been enough to maintain clean handoffs and ensure each block delivers what the next expects.

Tradeoffs

Every architecture has tradeoffs, and this approach is no exception.

Higher LLM costs: breaking the task down often requires inserting the same context multiple times (e.g., providing the same general background on your company or product). This consumes more input tokens, which can add up at production scale.

More time to develop: no-code agent builders make it fairly easy to create an agent. In contrast, creating robust workflows can take much longer, because you’re pre-determining the flow of steps.

Counterpoint: If you make those workflows modular, they become easily adaptable and reusable. E.g., my first call analysis pipeline took ~20 hours to build. The second use case took about 2 hours as I could re-use 90% of the architecture.

Less flexibility: Just like an assembly line, these workflows are highly templated and consistent. They won’t adapt to different requirements in real time the way an autonomous LLM agent would.

Counterpoint: When you analyze your requirements, true autonomy isn’t needed as often as we’d think.3 It’s far more common for users to expect reliable and predictable execution.

When an agent makes sense

Keeping these tradeoffs in mind, there are still many scenarios where some kind of agent architecture makes more sense than a workflow.

When the human is in the loop: conversational assistants, especially those that are not customer-facing, have a lower requirement for accuracy/consistency than lights-out automations. The human user can detect issues and help course-correct. Coding assistants are great examples of this scenario.

When requirements are open-ended: Open-ended / free-form discussion requires flexibility that a deterministic workflow can’t support. However, even in this scenario, it’s better to reduce the agent’s load as much as you can by providing workflows as tools, pre-processing data, and leveraging sub-agents.

For discovery and proofs of concept: you’ll often want to validate a use case or get a first level of analysis before investing time to build out a workflow. For example, I dumped a bunch of transcripts into Claude to help build a dictionary of themes for call analysis, which I then encoded in my workflow. It didn’t need to be perfect.

In summary

As we continue to move from AI hype-maximalism into serious system-building, I think operators need to walk back from the ledge of autonomous agents for most scenarios and get back to automation best practices.

I’m sure the day of digital workers will come, but for now, most of your leverage seems likely to be found in AI workflows.

Think about them like sophisticated AI micro-services or intelligent virtual production lines. You’ll likely find dozens of use cases you can implement quickly.

The best part is that you’ll have reliability and control, and this equates to internal credibility and real business value.

I’m referring here to simpler agent builders that focus on prompts, context, and tools.

More advanced graph-based frameworks (like LangGraph or CrewAI) provide a stateful way to break a task into components and define possible transitions between them. This allows explicit control over the flow of reasoning.

Some frameworks add planner components that dynamically choose the next step or generate sub-tasks at runtime. It’s a powerful idea for exploratory or creative work, but even those planners operate within a defined graph of nodes and transitions. The task decomposition still exists—it’s simply delegated to a controller agent. And because that agent’s decisions are probabilistic, you trade a measure of reliability for flexibility.

In practice, I find many common use cases don’t actually need this level of runtime flexibility to achieve the goal.

Here I’m referring to whether the run executes successfully or fails due to a technical error (context window limitation, API rate limit exceeded, etc.).

Although managing these errors is upstream of output quality, it is also a pre-requisite for quality output (as your quality obviously drops to zero if your execution fails).

A workflow makes it far easier to manage things like execution speed, wait steps, error handling, and exponential backoff that are critical for navigating around errors.

We can envision a not-too-distant future where advances in retrieval and memory may collapse the tradeoff between flexibility and reliability. But I think explicit control will always be foundational.