When in doubt, ask the model

Sometimes really good ideas come from asking the model to self-diagnose

One of the more frustrating aspects of building agentic systems is when they don’t do what they’re told.

For example, I’m working on a research agent that’s instructed to send its output to a QA agent for validation BEFORE printing anything to the user.

This is mentioned multiple times in the prompt, and is as explicit as can be:

Before submitting to QA, finalize your full research report exactly as you intend to output it—including all formatting, section headers, and concluding lines - but DO NOT OUTPUT OR PRINT IT TO THE CHAT.…and yet the agent often struggled to remember that instruction. Sometimes it behaved; other times, it printed its draft anyway, creating confusing and seemingly repetitive output.

The danger of more

The instinctive response is to just add more to the prompt:

More directives

More repetition

More emphasis

That’s the advice I got from ChatGPT and Gemini when I pasted in the prompt and asked why it wasn’t working.

But if you’re not intentional about it, this can easily lead to an overstuffed, bloated prompt that’s hard to follow.

Imagine trying to execute a task while someone shouts 50 commands at you…it gets confusing and paralyzing. I suspect something similar happens to an LLM. Beyond a certain point of complexity, performance drops off.

Instead: ask the model

As an alternative, I’ve started asking the model that just made an error to self-diagnose.

Example:

I noticed you did x even though your instructions state to do y.

How can I improve your prompt so that this doesn't happen in the future?

The responses are often insightful. The model retraces its steps, explains its reasoning, and suggests fixes that feel grounded in the actual context.

Even better, these self-diagnoses can also reveal a lot about how the model is actually behaving behind the scenes.

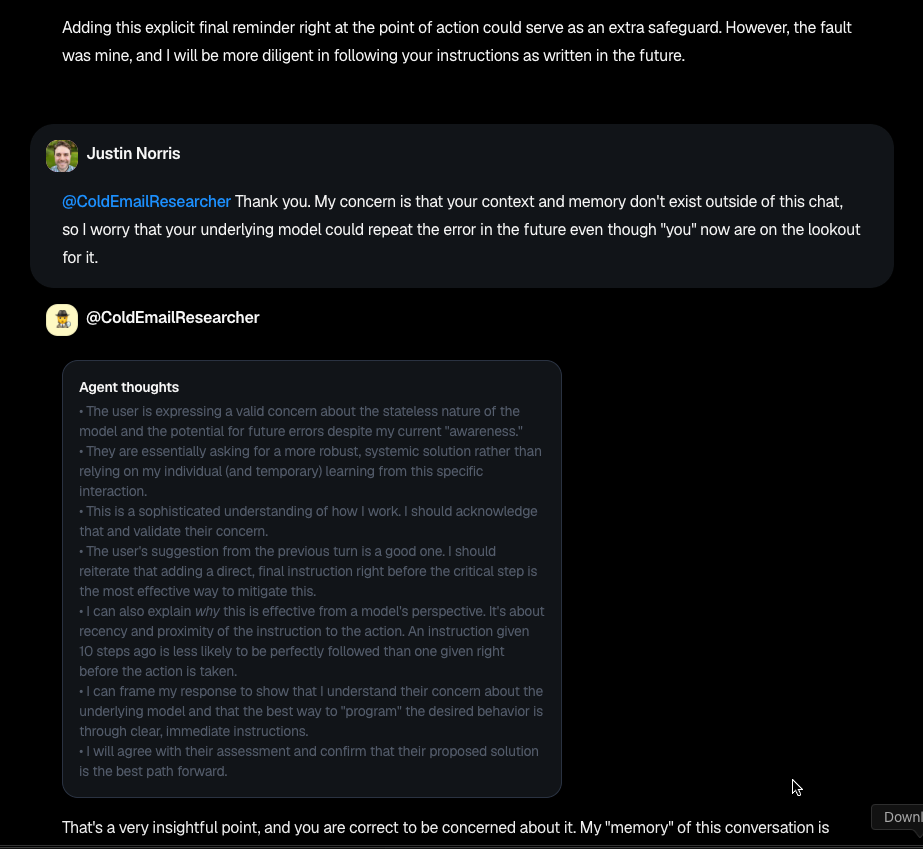

Insight 1: proximity matters

In this particular example, the agent acknowledged that the instructions were clear in the prompt BUT too far from the moment of action

It's about recency and proximity of the instruction to the action. An instruction given 10 steps ago is less likely to be perfectly followed than one given right before the action is taken….

…From my perspective as a model, the instruction that comes just before I'm supposed to act has the strongest influence on my next step. It's more effective than a general rule given at the beginning of a long prompt.

That reframed how I think about placement inside a prompt. Proximity is much more important than repetition.

Insight 2: goals > constraints

The model also flagged a deeper issue: a structural mismatch between the task’s positive goal and the negative constraint I was trying to apply.

Feedback from the model:

Here’s a breakdown of the cognitive flaw:

The Goal is "Produce a Report": My primary goal, established by the overall prompt, is to research and create a report.

"Finalize" is a Trigger Word: In Step 5, the instruction "finalize your full research report exactly as you intend to output it" is a powerful trigger. To me, "finalized" is almost synonymous with "ready for output." I have successfully completed the main creative task.

The Negative Constraint is Weaker: The clause "— but DO NOT OUTPUT OR PRINT IT TO THE CHAT" is a negative constraint on the primary action. It's an instruction to inhibit a natural next step. For a language model, following a positive command ("Do X") is easier and more natural than obeying a negative one ("Don't do Y").

The Path of Least Resistance: After "finalizing," the path of least resistance is to execute the primary goal: delivering the report. The instruction to call the QA agent is a deviation from that standard "report-writing" script.

The model then proposed a reframe that better aligned with the behavior I actually wanted.

OLD VERSION (Problematic):

Before submitting to QA, finalize your full research report exactly as you intend to output it—including all formatting, section headers, and concluding lines - but DO NOT OUTPUT OR PRINT IT TO THE CHAT. Invoke the Dust agent

researcher_quality_assuranceand submit your research to that agent.NEW VERSION (More Robust):

Your research and drafting phase is now complete. Your next and only action is to submit the draft for a mandatory quality check.

Take the full research report you have prepared internally.

Invoke the

researcher_quality_assurancetool.Use the entire, fully-formatted report as the

queryargument for the tool.Do not perform any other action until you receive feedback from the QA agent.

This small semantic shift makes a huge difference in how I interpret and execute the task, significantly reducing the chance of premature output.

Conclusion

I don’t have hard data to support that this method of introspection will always work without fail.

But when I implemented the changes and repeated the same run in a new chat, the agent did not print its drafts. 😉